Powerful generative AI tools are suddenly everywhere, embedded in many of the learning platforms students use daily. For students, they offer an irresistible shortcut: why write the essay, solve the complex math problem, or read the chapter when a chatbot can do it for you in seconds? Schools across the U.S. are scrambling to adapt. Blue books are back. So too are in-class exams and No. 2 pencils. Running student work through anti-AI checkers is standard practice. These are all pragmatic strategies by harried educators who, along with families, are on the front lines, mediating the next tidal wave of technological innovation for their students.

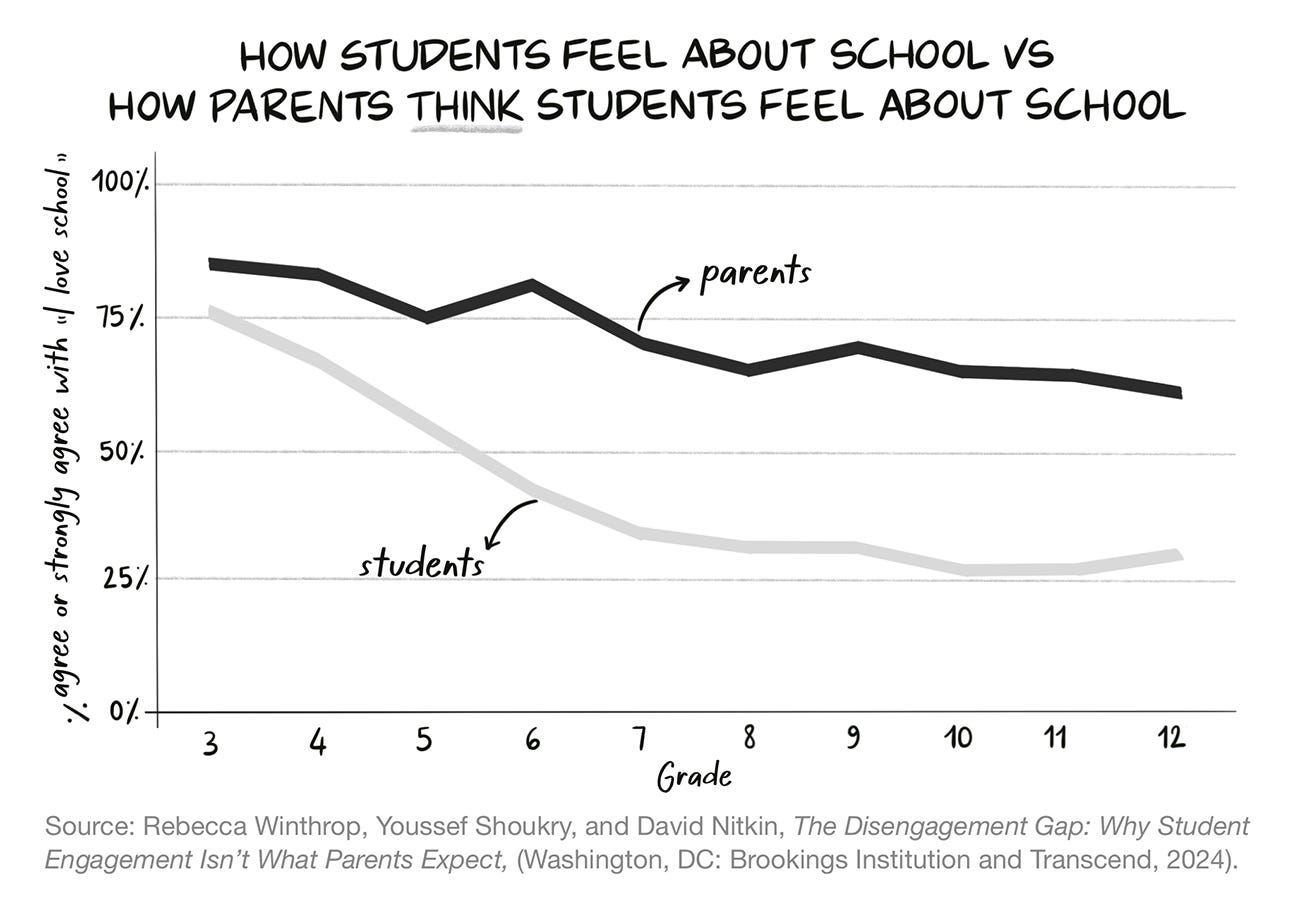

But these practical solutions miss a major underlying issue. The majority of American students are disengaged at school — a trend that began long before generative AI arrived. According to the U.S. census, only one in three students are highly engaged in school, a number that has been stubbornly consistent over the last decade. And while 65% of parents believe their 10th graders love school, only 26% of students actually say they do.

AI didn’t create this crisis, but it raises the stakes considerably. AI chatbots promise to reduce the “friction” of learning by teaming up with the student 24/7. But this friction isn’t a flaw that needs to be engineered away; it’s the whole point. The effort of working something out, of sitting with a challenge and finding a way through, is an essential part of the learning process. It’s what keeps students engaged, and engagement is both a prerequisite for real learning and a predictor of outcomes that reach far beyond the classroom, including higher graduation rates and life aspirations, and lower rates of depression and substance use disorder. In a world saturated with AI, the capacity to learn (to cultivate genuine curiosity, push through difficulty, and develop independent thinking) is the essential human skill. And it’s one we can still help students build.

The good news is that student engagement isn’t a mystery, and parents and teachers have more influence over it than they realize. When students have the agency and freedom to follow their own curiosity, engagement follows naturally. The key is knowing how to help kids get there.

The Four Modes of Student Engagement

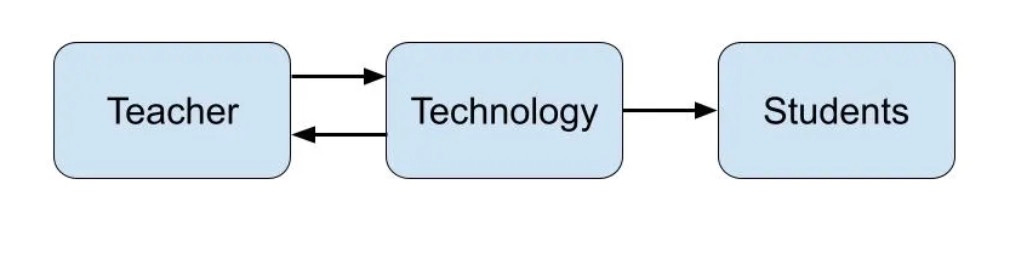

Academics agree that a combination of several elements shapes student engagement:

What students do (e.g., showing up, turning in homework)

What students think (e.g., making connections between classroom learning and experiences out of school)

What students feel (e.g., showing interest in what they are learning and enjoying school)

Whether students take initiative (e.g., proactively finding ways to make learning more interesting, such as asking to write a paper on a topic they love rather than the one that is assigned). This, in particular, is an essential skill in an AI-infused world.

Because much of this is internal, it can be hard to see. So teachers and parents often rely on external behavior and outcomes as their gauges. But grades and attendance only tell part of the story — and they lead well-meaning parents to encourage compliance rather than real engagement.

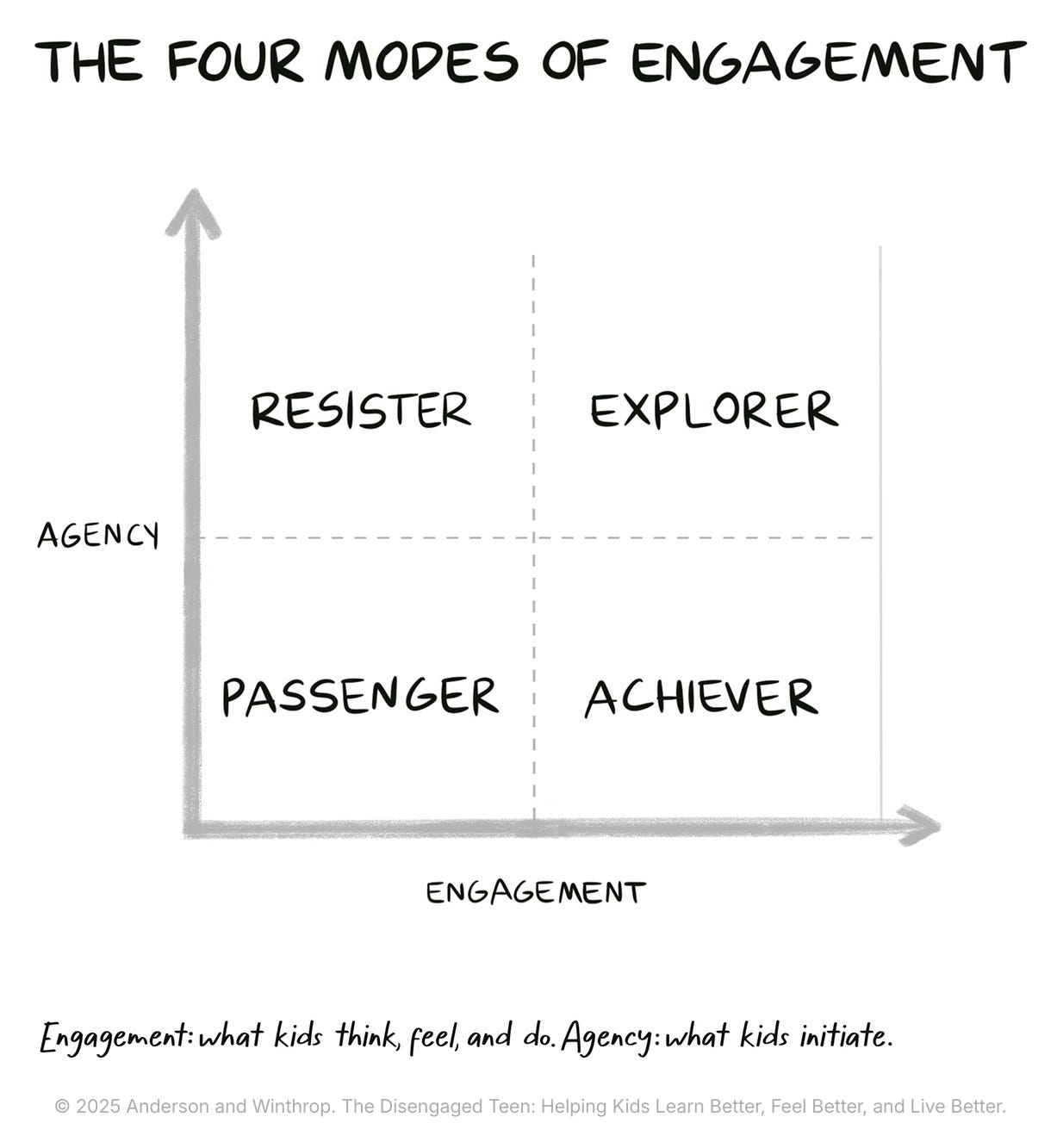

That’s where a clearer framework helps. In our research for our recent book, The Disengaged Teen, we identified four distinct modes of student engagement and disengagement in school: Passenger, Achiever, Resister, and Explorer. These modes give teachers and parents language and a deeper understanding of where their students get stuck, and offer practical tools to help reignite their motivation.

In Passenger mode, students are coasting — doing the bare minimum. Parents of kids in Passenger mode often get a one-word response from their kids when they ask about school: “boring.” Students in Passenger mode may rush through homework and barely study for exams, yet some still get straight As. For these students, school can feel too easy, offering little challenge or excitement. Their coping strategy is to check out and focus on friends, gaming, sports — anything more interesting. For students stuck here, AI is an easy shortcut to finish homework faster and get back to hanging out.

Students in Achiever mode are trying to get a gold star on everything — academics, extracurriculars, service, you name it. They are driven and impressive but often exhausted. Fear of failure haunts these students, and many wilt when their performance dips even slightly. A common frustration among students in Achiever mode is, “My teacher didn’t tell me exactly what to do to get an A.” A B+ can trigger alarm, extra studying, late nights, and lost sleep. Achievers are focused on the end goal — the grade — not the learning process, and for many, cheating was already common before AI came along. Now, chatbots make it even easier to power through their pile of work.

Students in Resister mode use whatever influence they have to signal, to both teachers and families, that school isn’t working for them. Some actively avoid learning. Others disrupt their learning by derailing lessons and acting out, becoming the “problem child.” But they have something going for them that students in Passenger mode do not: agency. They aren’t taking their lot lying down; they are influencing the flow of instruction, though in a negative way. We found that, if given the chance, those in Resister mode can move to Explorer mode (the final and most engaged mode) more quickly than students stuck in Passenger or Achiever mode.

The peak of the engagement mountain is Explorer mode, where students develop the willingness and desire to learn new things. Here students’ agency meets their drive. Their involvement runs deep and they find meaning in the effort required to learn. Explorer mode includes the active curiosity Jonathan Haidt calls “Discover Mode.” Students in Explorer mode feel confident enough to take creative risks, generate their own ideas, and solve problems in the classroom. When a student in Explorer mode is asked “How was school?” their answer is not a monosyllabic “fine” but an excited breakdown of how tornadoes work or how they calculated Taylor Swift’s net worth using newly acquired math skills.

A key way to build engagement is to give students some autonomy in the classroom. Across 35 randomized controlled trials in the U.S. and 17 other countries over three decades, learning, achievement, positive self-concept, prosocial behavior, and numerous other benefits increased when teachers gave students opportunities to engage by having a small say in the flow of instruction, such as choosing among homework options, providing feedback at the end of a lesson, or asking questions about their curiosities. To develop initiative, which builds agency, kids need to practice it. For that, they need to get into Explorer mode.

In academic terms, this is agentic engagement — the ability and desire to initiate learning, express preferences, investigate interests, solve problems, and persist in the face of challenges. It is the foundation of a meaningful life and the life skills required to navigate an AI-saturated world.

The modes of engagement are dynamic: students move among them all the time based on their environments, their “efficacy” (i.e., how successful they think they can be), and their emotions. When students are given the freedom to explore, they often take it, and with it they can develop agency. Parents and educators also play a massive role in influencing what mode kids show up in, often without knowing it.

The Case of Kia

Kia, one of the students we interviewed for our book, is a classic example of how agency can pull a student back from the disengagement brink. In elementary school, she was vibrant, reading incessantly and debating Percy Jackson plot points with her dad. But by middle school, she was bored, stifled, and completely checked out — stuck squarely in Passenger mode.

Worried about her profound disengagement, a creative teacher tried an unusual strategy: he invited her to join a learner advisory panel to tell the school board what it really felt like to sit at a desk all day. As Kia put it, her brain flipped from “This is useless, and I hate everything,” to “Hold on, maybe I have a say.”

At home, her father — who had gone straight to work after high school — refused to let her intellect become dormant. He treated her questions as worthy, whether she was asking why water towers are round or for a definition of “pedagogy.” He treated her like a thinker even when school made her feel like a failure.

When her school eventually introduced “studios,” where students design their own projects, Kia leaned into her love of storytelling, creating a podcast on mythology and an escape room about presidential assassinations. That agency rewired her, allowing her to fully enter Explorer mode. Even when she later landed in dry, lecture-heavy college classes, she still thrived. “I learned that you can learn anything. You just have to know how you work and how to teach yourself.”

This shift from compliance to choice, from helplessness to agency, supported by her dad and her teachers, took Kia from Passenger mode to Explorer mode and helped her rediscover the curiosity and drive she had in elementary school.

The Exploration Gap

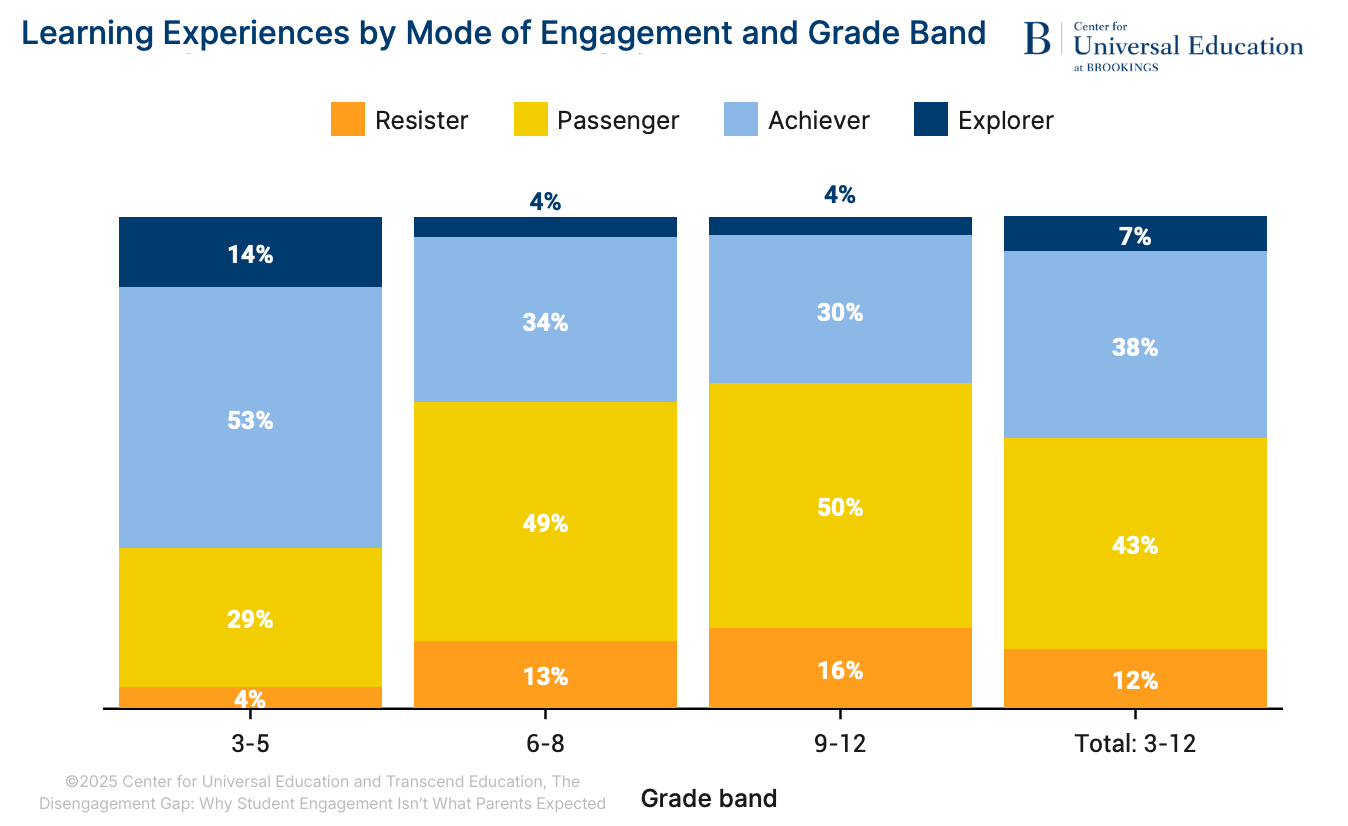

Our Brookings–Transcend study found that fewer than 4% of students in middle and high school regularly had in-school experiences that supported Explorer mode.

The shift in student engagement during the transition from 5th to 6th grade — when most students in the U.S. enter middle school — is striking. More coasting, less achieving, more resisting, and less exploring all characterize the move from elementary to middle school.

Why does this happen? One key factor is lack of agency and a school system that, for most learners, undermines Explorer mode. At a moment of peak brain development, when young people seek meaning about themselves and the world, many are shuffled through classes like factory workers, pounded with content that feels standardized and irrelevant, and pressured to win a race they don’t want to run. Despite all the energy they expend, they increasingly feel like they have little say in how they spend their days.

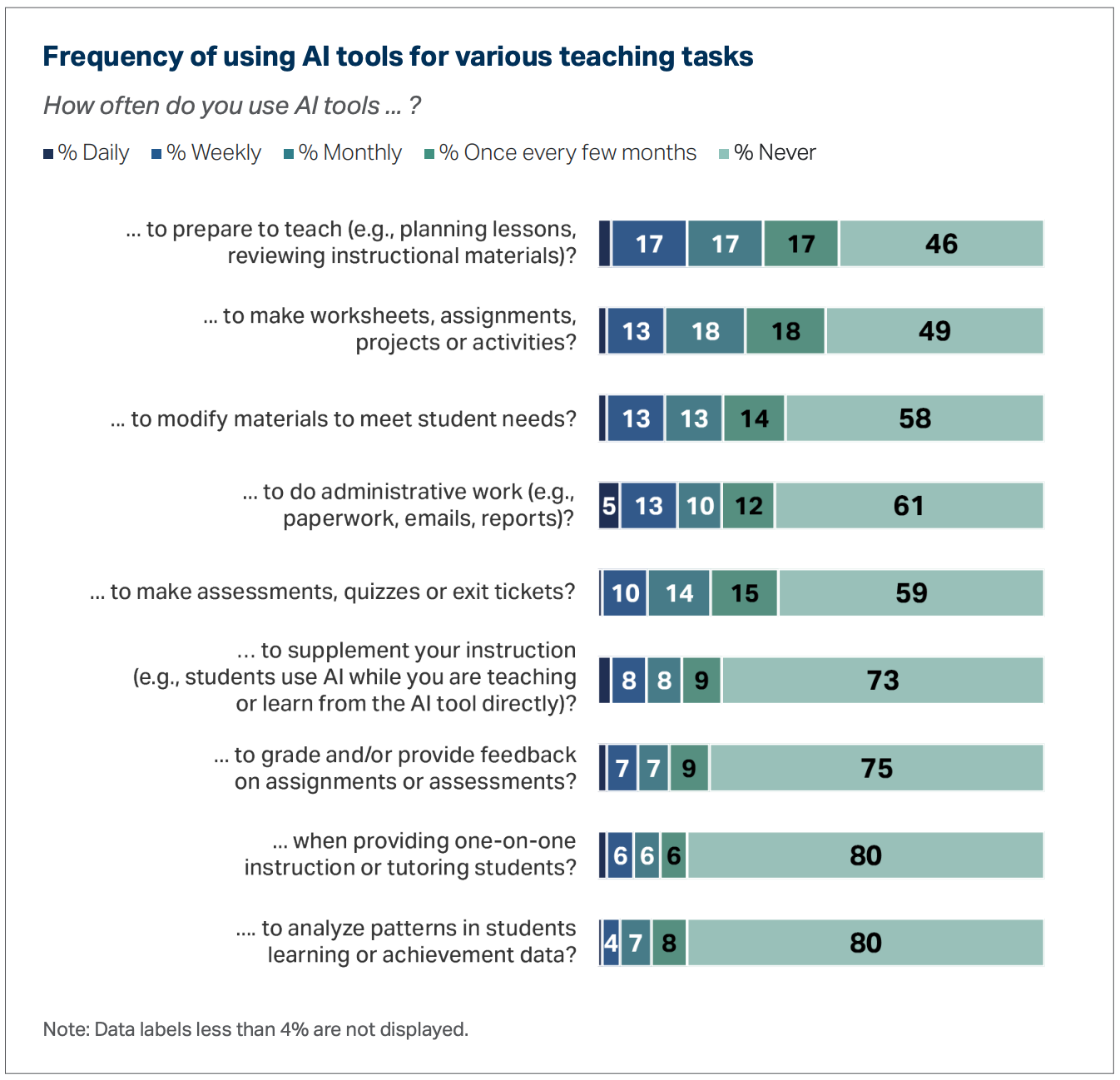

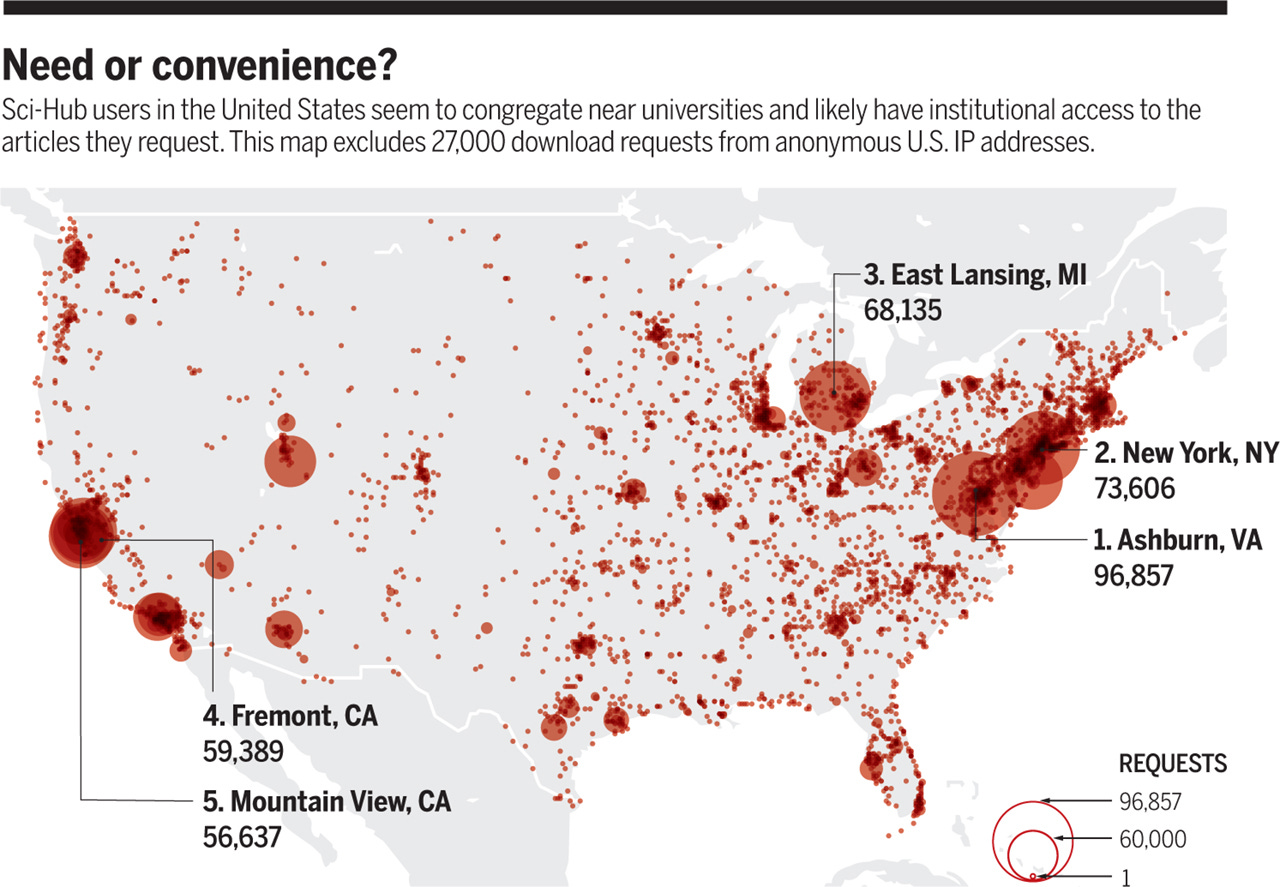

Some have proposed that generative AI could unlock students’ motivations and interests. But recent global Brookings Institution research examining the benefits and risks of AI for student learning found that current use, especially open-ended discussion with AI chatbots and AI “friends,” undermines students’ cognitive development, motivation to learn, and, ultimately, engagement with the material.

The solution is not a better algorithm. It’s centering human connection, creativity, and student agency, which parents and teachers are uniquely positioned to do.

Six Actions to Get Kids into Explorer Mode

When kids are in Explorer mode, they are motivated to learn, and motivation is crucial. “Biology doesn’t waste energy,” says Mary Helen Immordino-Yang, a psychologist and neuroscientist at the University of Southern California. “We don’t think about things that don’t matter.” Students are motivated by having authentic opportunities to contribute and learn meaningful things.

Families and schools can work together here. When adults at home and educators in school work collaboratively, kids’ outcomes routinely improve. Schools are ten times more likely to improve when this strong collaboration exists. Today, there are six actions families and schools can take to help our children have more Explorer moments.

1. Model the thrill of learning.

“Curiosity is contagious,” writes author Ian Leslie. “So is incuriosity.” It may sound simple, but one of the most powerful ways parents at home can support student engagement is by letting their kids see them having Explorer moments of their own.

John Hattie, a professor at the University of Melbourne, calls this being your child’s “first learner,” namely modeling the thrill of learning in everyday activities. This, much more than parents’ well-intentioned hovering around homework completion, helps students engage and do well in school. When Hattie examined the effects of parental involvement on student achievement across almost two thousand studies covering over two million students around the globe, he found “[w]hen parents see their role as surveillance, such as commanding that homework be completed, the effect size is negative.” In other words, parental nagging and controlling make things worse, not better.

2. Know your child, know their mode.

Parents and educators will have more success getting their kids and students into Explorer mode if they truly know the child they have in front of them, and tailor their support accordingly. The modes are dynamic, and kids move between them, but when kids get stuck in one mode it can become an identity. The kid in Passenger mode becomes the “lazy kid,” the kid in Achiever mode the “smart kid,” the kid in Resister mode the “problem kid.”

Kids don’t need a label; they each need a slightly different nudge to help move them into Explorer mode. The Engagement Toolkit in our book provides a host of practical strategies unique to each mode. For example, the kid who frequently procrastinates because they’re stuck in Passenger mode may need help developing study and planning skills. The student deep in Achiever mode may need help learning that failure is not the end of the world, something they can develop by taking small risks. Kids in Resister mode often need help developing a pathway out of the rut they are in (what Daphna Oyserman calls a vision of a “future possible self”) as well as a plan to get there.

3. Support ways for young people to make authentic contributions.

Adolescence is a period of profound opportunity as well as vulnerability. Teens ask important questions like “Who am I in the world? What matters to me? Do I matter? What kind of future can I build?” They need actual experiences to get data on the answers and build the muscles of being a respected contributor to a community. Cultural anthropologists call the process of gaining that status “earned prestige.” Too often young people default to social media to seek this status. Families and schools can both counteract that by giving kids opportunities for real-life contributions. When families rely on students to help get dinner made, bedrooms cleaned, the dog walked, or a meal delivered to an elderly neighbor, it helps young people know they matter for more than their latest test grade.

At school, when kids engage in experiential projects like “What happened in that abandoned factory?” or “Who lies beneath a headstone marked with only a single letter?” — as they did in Amenia, New York — they do work that matters in their communities. Connecting learning experiences to real life, from asking questions about how their local economy works to their community’s cultural norms, gives students opportunities to make meaning of their assignments while learning about themselves and their place in the world.

4. Never take away extracurriculars because of poor academic performance.

Too often, schools make participation in extracurricular activities contingent on good grades. A nationally representative survey found that nearly 60 percent of students earning all As participate in arts-related extracurriculars, compared to just over 30 percent of students with Cs and Ds. A similar divide exists in sports. The logic may seem sound — a child struggling with algebra doesn’t need more time on the basketball court. But this approach is misguided.

Struggling students need something to be excited about: a place to explore, connect, and shine. When students discover their “spark,” as the late youth advocate Peter Benson called it, that passion can sustain them through life’s ups and downs and boost engagement in school. If that spark is athletics or theater or music and that gets taken away because of academic performance, most kids in Passenger or Resister mode will not suddenly shift to Explorer mode so they can participate again — they’ll become even more disengaged. To foster more Explorer moments, schools should make extracurricular participation contingent on attendance and positive behavior — not grades.

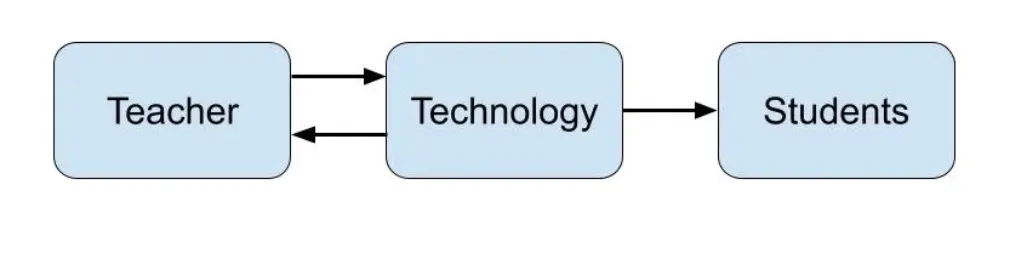

5. Help students manage technology.

Another key is helping students escape the seductive power of tech distractions. Parents can set limits for children’s technology use at home, which can help ensure they are well rested enough to tap into their curiosity and creativity. They can advocate that their students’ school and community limit cell phone and social media use, as Jonathan Haidt recommends in The Anxious Generation. Parents can also check their own tech use at home. Children learn from what we do, more than what we say.

6. Hold a workshop or book group on the four modes of engagement.

You can’t fix what you can’t see. Only a third of 10th graders report having opportunities to develop their own ideas, compared to 69% of parents who think they do. A key aim of our book is to make the invisible — learning and engagement — visible, so we can develop strategies to improve it.

According to our research, teachers and parents want language and tools to talk about learning without creating friction. The four modes can unlock the conversations we need to have with our children and students. Parents can suggest the modes as a topic for a parents’ evening, a school discussion with educators, or back-to-school night. So, whether it’s a book club (we have a free study guide) or a webinar, workshop, keynote, or focus group, sparking dialogue about student engagement is a great first step to boosting it.

Conclusion

Despite its powerful role in learning, student engagement is rarely at the center of education discussions. Instead, grades and attendance dominate the conversation. Our hyper-focus on outcomes over inputs is a mistake. Instead of fixating on the leaves of the tree — test scores and grades — we need to tend to the roots, the invisible network of curiosity and motivation that will keep the tree growing.

In an increasingly AI-saturated world, Passenger mode is a seductive trap: a path where thinking is outsourced and agency atrophies. More than ever, we must prioritize student engagement and treat Explorer mode not as a nice-to-have, but as an essential life skill. That means giving kids the agency to follow their genuine curiosity, take creative risks, and find meaning in their own learning. Every student is capable of Explorer mode; we just have to help them get there.