Mario Antoine Aoun

How Generative Models Are Ruining Themselves

DOI:10.1145/3748642

https://bit.ly/4eNTFD0

I argue that as generative AI is used more widely, the quality of generated content will decline, because that content will increasingly be based on artificial and generic data.

For instance, a newly generated picture will be based on original images authentically created by people (such as photographers) plus machine-generated images; however, the latter are not as good as the former in details such as contrast and edges. Similarly, AI-generated text will be based on original creative content by real people plus machine-generated text, which may be repetitive and formulaic. Data generated globally is almost doubling every three years,12 so in the years to come humanity will produce more data than it has ever created. If the Internet becomes overloaded with AI-generated material, that material will negatively affect the output of the AI models themselves.
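As a rough check of the doubling argument, assume (purely for illustration; this is not a figure from the cited source) that the cumulative volume of data follows $V(t) = V_0 \, 2^{t/3}$, with $t$ in years. The amount created in any three-year window is then

$$V(t+3) - V(t) = 2\,V(t) - V(t) = V(t),$$

that is, under this assumption each three-year period adds about as much data as existed in total at its start. Whatever share of that new data is machine-generated therefore enters the pool at the same scale as everything that came before it.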

AI generative models are trained using Internet data (from sources such as websites, curated content, forums, and social media). People’s interactions with that data (reacting to it, reposting it, or endorsing it) will amplify a profusion of unreliable content whose origin was unoriginal and AI-generated. Those interactions will in turn be included in future training sets. Together, these effects will unfavorably influence the results of generative models in the future.

Why and how could this happen? And what can we do about it?

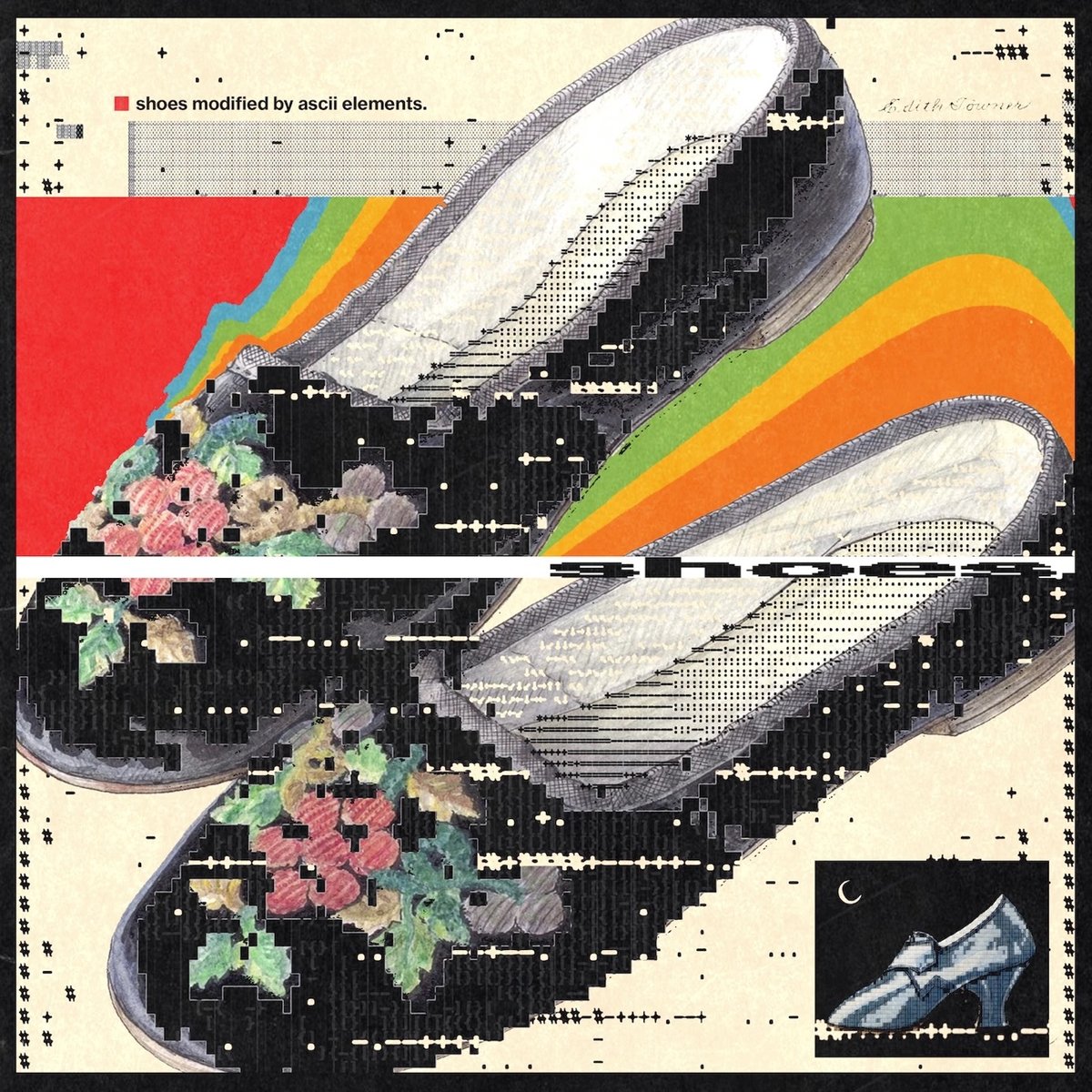

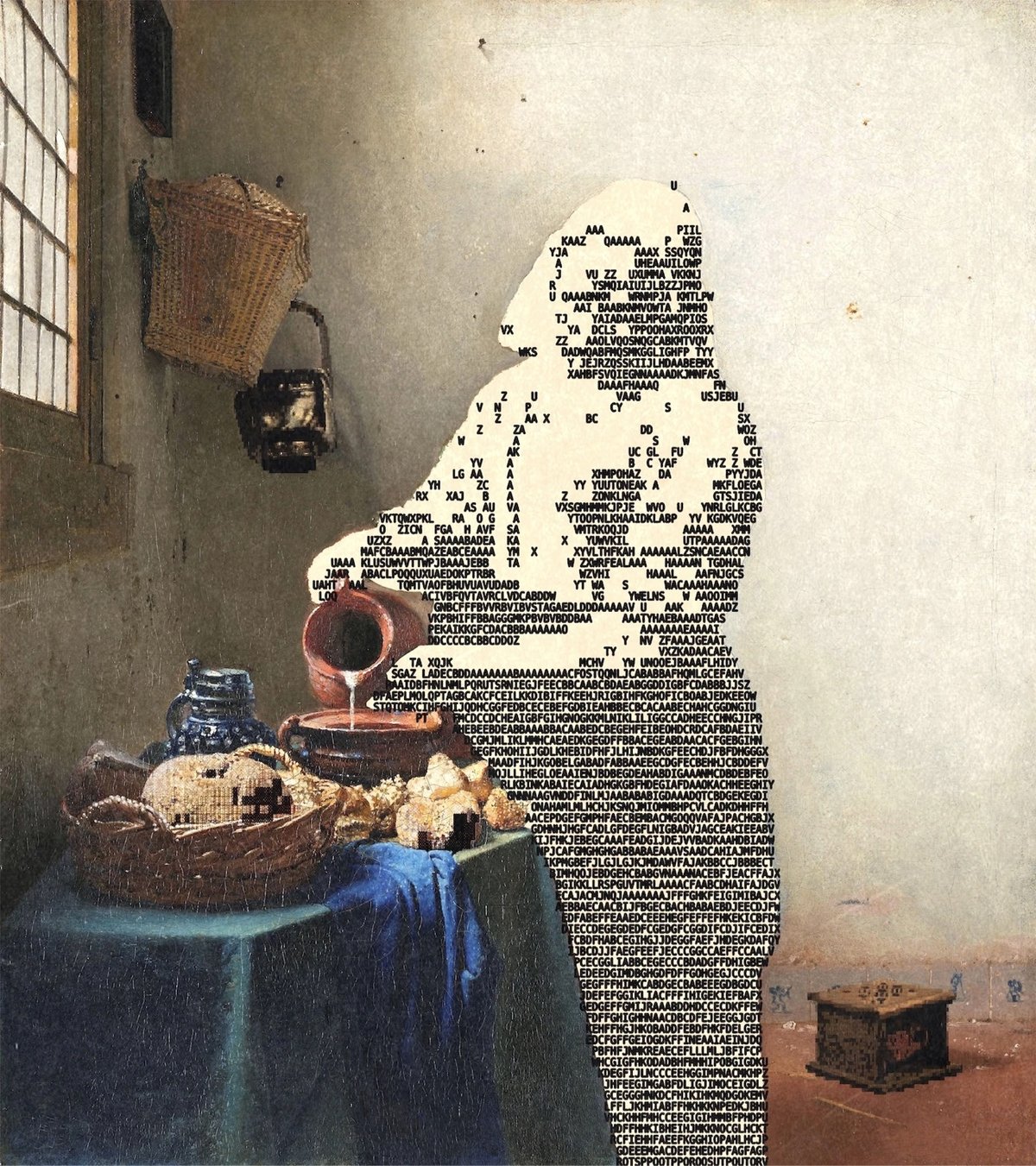

Consider, for example, asking an AI generative model to create an image of the Last Supper. It will succeed, drawing on previously encountered paintings of the Last Supper by classical painters. Nonetheless, if we look into the details of any such generated image, we can easily detect discrepancies, specifically in the drawing of hands, fingers, ears, teeth, pupils, and other small but conspicuous details in the foreground, and sometimes in the background. Those details are difficult to render even for proficient artists.11 Now imagine that AI systems encounter more and more images (photos or paintings) containing unrealistic fine details, whether because such details are difficult to create or because the images were filtered or generated using AI; they will then generate outcomes with obviously unrealistic details. This is because generative models are based on artificial neural networks (ANNs), which are essentially function approximators.6 In other words, they always produce an output based on generalizations learned from historical inputs, but this history is continually contaminated with discrepancies. Better put, generative models try to depict reality but embed glitches inherited from their own generated content. Because they cannot discriminate between good and flawed content while doing so, I argue that they will inadvertently ruin themselves in the long run.

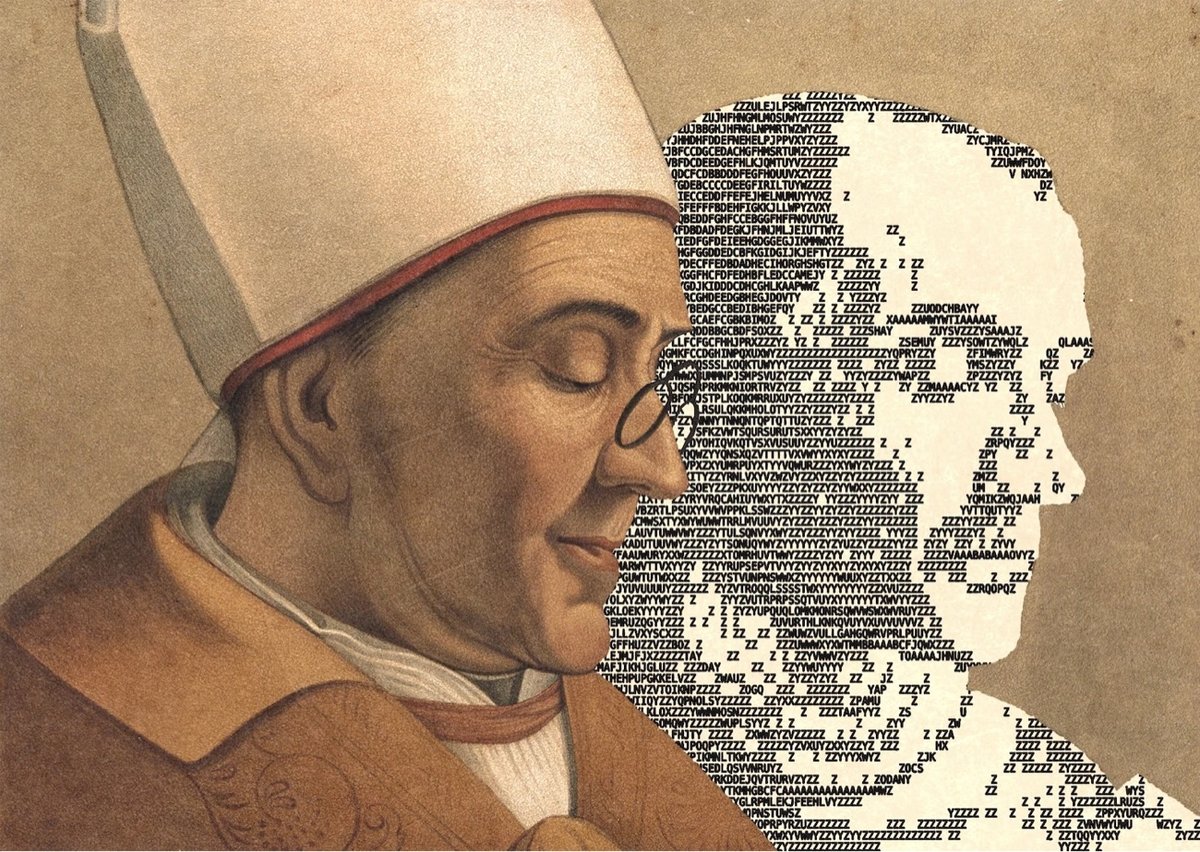

As previously argued,2 generative models are statistical models lacking creative reasoning capabilities or emergent behaviors. Moreover, in experiments where the output of an AI system was fed back as its input, the system’s output became gibberish after many runs.10 In addition, generative models are known to produce emotionless,5 neutral,4 low-perplexity,3 and tedious content.9 Also, according to the adage ‘garbage in, garbage out’ (GIGO), the quality of any computing system’s output depends on its inputs;1 hence, if the system is evolving and learning from less-elegant data, it will produce less-elegant data. Consequently, the proliferation of trivial AI-generated content will soon lead to more boring, emotionless, and biased results, flawed with discrepancies and unrealistic details. As I already highlighted, ANNs are sensitive to their inputs and ‘perfect’ at generalizing from them; thus, through their own generative capabilities, they will degrade the outcomes they offer, passing impurities on from generation to generation (that is, through version updates and retraining).
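The feedback effect described above can be illustrated with a deliberately tiny toy model. The sketch below is not the cited experiment and involves no neural network: it assumes the "model" is just a Gaussian fitted to its training data, and that each new generation is trained only on samples drawn from the previous fit. The function name, sample size, and number of generations are all hypothetical choices made for illustration.

```python
import random
import statistics


def simulate_recursive_training(generations=500, sample_size=10, seed=0):
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation of each generation, in order.
    """
    rng = random.Random(seed)
    # Generation 0: "authentic" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = []
    for _ in range(generations):
        # "Train" the toy model: estimate the mean and standard deviation.
        mu = statistics.mean(data)
        sigma = statistics.stdev(data)
        spreads.append(sigma)
        # The next generation sees only the previous model's own output.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_training()
    for g in (0, 10, 100, 499):
        print(f"generation {g:3d}: fitted std = {spreads[g]:.3e}")
```

In this setting the fitted spread tends to shrink from generation to generation, because each refit loses a little of the tails and nothing ever restores them. It is only an analogy for the diversity loss argued above, under the stated assumptions, and says nothing about how quickly a real generative model would degrade.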

One could argue that generative models are well suited to producing outstanding results in domains such as law exams. It should be noted, however, that this is a narrow domain of application, with far less effect than their use across the wide spread of knowledge that they will provide, or help generate, in the public and private domains. Narrow applications of generative models in specific domains might be useful, but here I am addressing the global impact of such models and their own deterioration over the long term. In this regard, the ultimate way to contain such data poisoning (for example, flooding the Internet with degenerate content) is through awareness and responsible use of generative models. For instance, AI-generated content should not be rushed online; it should be well refined and, even better, checked or enhanced by experts.

Penrose, while criticizing AI based on classical computation, was also positive about future technological advancements that would enhance AI’s capabilities.8 Similarly, I am criticizing AI based on currently available technologies (such as generative models). If, in the future, a different technology takes the stage, this might alter my critique.

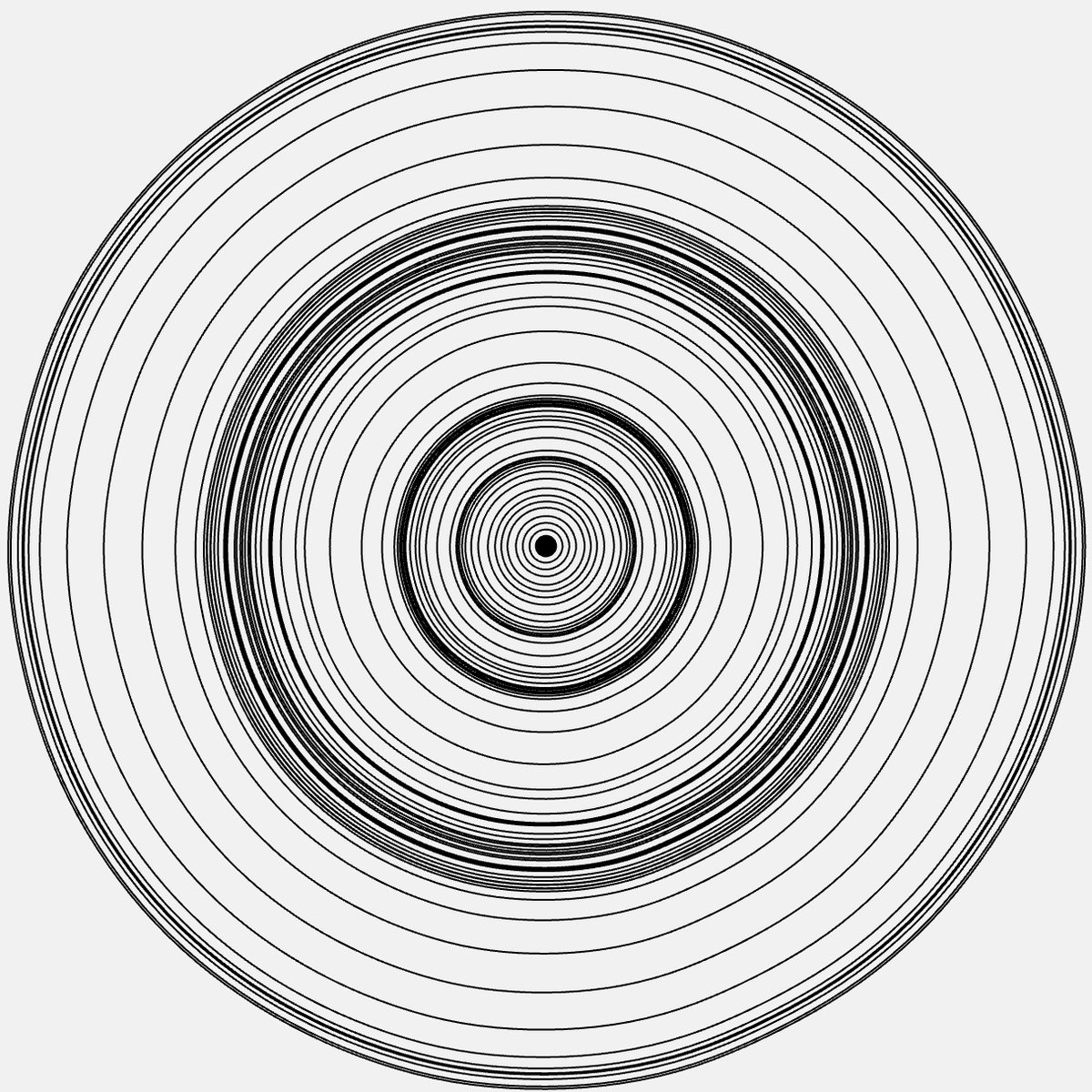

I conclude with the following challenge for generative models or any future technology: learning the Mandelbrot set image. An ANN that learns from all Mandelbrot set images available on the Internet will never be able to grasp the complex dynamics behind the countless affinities and similarities found in the set.7 In fact, it will produce very similar images of the set at a wide scale but will fall short on the details (for example, the periphery will appear blurred and pixelated when zoomed in, whereas on a true Mandelbrot set the periphery is always refined). So, is it possible for a machine, one day, to create, understand, and look at something similar to the Mandelbrot set, or the Mandelbrot set itself, the way Benoit Mandelbrot did with his intuition, or the way any one of us feels toward its mathematical beauty?
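For readers who want to see why the true periphery stays refined, recall that the set is defined by a rule rather than by a picture: a complex number c belongs to it when the iteration z → z² + c, started at z = 0, stays bounded. The sketch below uses the standard escape-time test with escape radius 2; the function names, the ASCII rendering, and the iteration cap are illustrative assumptions, not part of the argument above.

```python
def escape_time(c, max_iter=200):
    """Count iterations of z -> z*z + c before |z| exceeds 2.

    Returns max_iter if the orbit has not escaped by then, in which case
    c is treated as belonging to the set.
    """
    z = 0j
    for n in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return n
    return max_iter


def render(center, width, size=24, max_iter=200):
    """Return an ASCII picture of a square window of the complex plane."""
    rows = []
    for j in range(size):
        # Imaginary part runs from the top of the window to the bottom.
        im = center.imag + width * (0.5 - j / (size - 1))
        row = ""
        for i in range(size):
            re = center.real + width * (i / (size - 1) - 0.5)
            inside = escape_time(complex(re, im), max_iter) == max_iter
            row += "#" if inside else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Whole set, then a window 300 times narrower near the boundary.
    for line in render(complex(-0.5, 0.0), 3.0):
        print(line)
    print()
    for line in render(complex(-0.75, 0.1), 0.01, max_iter=1000):
        print(line)
```

The second call recomputes every point of the narrower window from the rule itself, so detail is regenerated rather than magnified from stored pixels; deeper zooms generally need a larger `max_iter`. A system that has only seen finished images has no such rule to fall back on, which is the gap the challenge points at.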