If you ever want a good laugh, ask an academic to explain what they get paid to do, and who pays them to do it.

In STEM fields, it works like this: the university pays you to teach, but unless you’re at a liberal arts college, you don’t actually get promoted or recognized for your teaching. Instead, you get promoted and recognized for your research, which the university does not generally pay you for. You have to ask someone else to provide that part of your salary, and in the US, that someone else is usually the federal government. If you’re lucky—and these days, very lucky—you get a chunk of money to grow your bacteria or smash your electrons together or whatever, you write up your results for publication, and this is where the monkey business really begins.

In most disciplines, the next step is sending your paper to a peer-reviewed journal, where it gets evaluated by an editor and (if the editor sees some promise in it) a few reviewers. These people are academics just like you, and they generally do not get paid for their time. Editors maybe get a small stipend and a bit of professional cred, while reviewers get nothing but the warm fuzzies of doing “service to the field”, or the cold thrill of tanking other people’s papers.

If you’re lucky again, your paper gets accepted by the journal, which now owns the copyright to your work. They do not pay you for this! If anything, you pay them an “article processing charge” for the privilege of no longer owning the rights to your paper. This is considered a great honor.

The journals then paywall your work, sell the access back to you and your colleagues, and pocket the profit. Universities cover these subscriptions and fees by charging the government “indirect costs” on every grant—money that doesn’t go to the research itself, but to all the things that support the research, like keeping the lights on, cleaning the toilets, and accessing the journals that the researchers need to read.

Nothing about this system makes sense, which is why I think we should build a new one. In the meantime, though, we should also fix the old one. But that’s hard, for two reasons. First, many people are invested in things working exactly the way they do now, so every stupid idea has a constituency behind it. Second, our current administration seems to believe in policy by bloodletting: if something isn’t working, just slice it open at random. Thanks to these haphazard cuts and cancellations, we now have a system that is both dysfunctional and anemic.

I see a way to solve both problems at once. We can satisfy both the scientists and the scalpel-wielding politicians by ridding ourselves of the one constituency that should not exist. Of all the crazy parts of our crazy system, the craziest part is where taxpayers pay for the research, then pay private companies to publish it, and then pay again so scientists can read it. We may not agree on much, but we can all agree on this: it is time, finally and forever, to get rid of for-profit scientific publishers.

MOMMY, WHERE DO SCAMS COME FROM?

The writer G.K. Chesterton once said that before you knock anything down, you ought to know how it got there in the first place. So before we show for-profit publishers the pointy end of a pitchfork, we ought to know where they came from and why they persist.

It used to be a huge pain to produce a physical journal—someone had to operate the printing presses, lick the stamps, and mail the copies all over the world. Unsurprisingly, academics didn’t care much about doing those things. When government money started flowing into universities post-World War II and the number of articles exploded, private companies were like, “Hey, why don’t we take these journals off your hands—you keep doing the scientific stuff and we’ll handle all the boring stuff.” And the academics were like “Sounds good, we’re sure this won’t have any unforeseen consequences.”

Those companies knew they had a captive audience, so they bought up as many journals as they could. Journal articles aren’t interchangeable commodities like corn or soybeans—if your science supplier starts gouging you, you can’t just switch to a new one. Adding to this lock-in effect, publishing in “high-impact” journals became the key to success in science, which meant if you wanted to move up, your university had to pay up. So, even as the internet made it much cheaper to produce a journal, publishers made it much more expensive to subscribe to one.

The people running this scam had no illusions about it, even if they hoped that other people did. Here’s how one CEO described it:

You have no idea how profitable these journals are once you stop doing anything. When you’re building a journal, you spend time getting good editorial boards, you treat them well, you give them dinners. [...] [and then] we stop doing all that stuff and then the cash just pours out and you wouldn’t believe how wonderful it is.

So here’s the report we can make to Mr. Chesterton: for-profit scientific publishers arose to solve the problem of producing physical journals. The internet mostly solved that problem. Now the publishers are the problem. These days, Springer Nature, Elsevier, Wiley, and the like are basically giant operations that proofread, format, and store PDFs. That’s not nothing, but it’s pretty close to nothing.

No one knows how much publishers make in return for providing these modest services, but we can guess. In 2017, the Association of Research Libraries surveyed its 123 member institutions and found they were paying a collective $1 billion in journal subscriptions every year. The ARL covers some of the biggest universities, but not nearly all of them, so let’s guess that number accounts for half of all university subscription spending. In 2023, the federal government estimated it paid nearly $380 million in article processing charges alone, and those are separate from subscriptions. So it wouldn’t be crazy if American universities were paying something like $2.5 billion to publishers every year, with the majority of that ultimately coming from taxpayers.
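For anyone who wants to poke at that guess, here is a minimal sketch of the arithmetic. The dollar figures are the ones quoted above (and they come from different years, so treat the sum as rough). The 50% ARL share is purely my assumption, and the function and parameter names are made up for illustration.

```python
# Back-of-envelope estimate of what American universities pay publishers per year.
# The two dollar figures come from the essay; the ARL share is a guess, not data.

ARL_SUBSCRIPTIONS_USD = 1.0e9  # ARL members' collective yearly subscription spend (2017 survey)
FEDERAL_APC_USD = 380e6        # federal estimate of article processing charges (2023)


def estimate_publisher_spend(arl_share: float = 0.5) -> float:
    """Scale ARL subscription spending up to all universities, then add APCs.

    arl_share is the assumed fraction of all university subscription
    spending that ARL members account for (a hypothetical parameter).
    """
    if not 0 < arl_share <= 1:
        raise ValueError("arl_share must be in (0, 1]")
    total_subscriptions = ARL_SUBSCRIPTIONS_USD / arl_share
    return total_subscriptions + FEDERAL_APC_USD


if __name__ == "__main__":
    # Try a few guesses for the ARL share to see how sensitive the total is.
    for share in (0.4, 0.5, 0.6):
        total = estimate_publisher_spend(share)
        print(f"ARL share {share:.0%}: about ${total / 1e9:.2f} billion per year")
```

With the 50% guess, the total comes to roughly $2.4 billion a year, which is where "something like $2.5 billion" comes from. If ARL members cover a smaller share of the market, the total only goes up.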

(By the way, the estimated profit margins for commercial scientific publishers are around 40%, which is higher than Microsoft.)

To put those costs in perspective: if the federal government cut out the publishers, it would probably save more money every year than it has “saved” in its recent attempts to cut off scientific funding to universities. It’s unclear how much money will ultimately be clawed back, as grants continue to get frozen, unfrozen, litigated, and negotiated. But right now, it seems like ~$1.4 billion in promised science funding is simply not going to be paid out. We could save more than that every year if we just stopped writing checks to John Wiley & Sons.

PUNK ROCK SCIENCE

How can such a scam continue to exist? In large part, it’s because of a computer hacker from Kazakhstan.

The political scientist James C. Scott once wrote that many systems only “work” because people disobey them. For instance, the Soviet Union attempted to impose agricultural regulations so strict that people would have starved if they followed the letter of the law. Instead, citizens grew and traded food in secret. This made it look like the regulations were successful, when in fact they were a sham.1

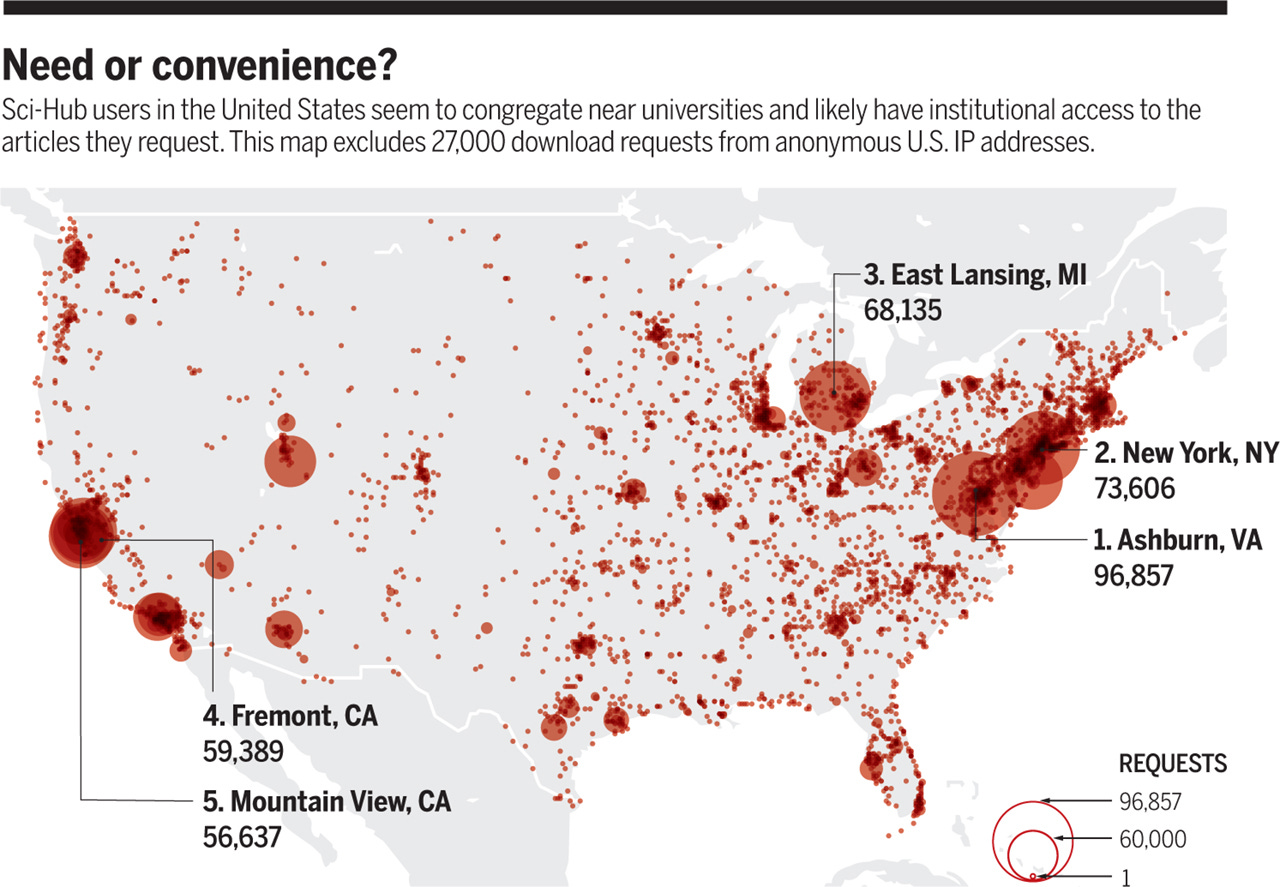

Something similar is happening right now in science, except Russia is on the opposite side of the story this time. In the early 2010s, a Kazakhstani computer programmer named Alexandra Elbakyan started downloading articles en masse and posting them publicly on a website called SciHub. The publishers sued her, so she’s hiding out in Russia, which protects her from extradition. As you can see in the map below, millions of people now use SciHub to access scientific articles, including lots of people who seem to work at universities:

Why would researchers resort to piracy when they have legitimate access themselves? Maybe because journals’ interfaces are so clunky and annoying that it’s faster to go straight to SciHub. Or maybe it’s because those researchers don’t actually have access. Universities are always trying to save money by canceling journal subscriptions, so academics often have to rely on bootleg copies. Either way, SciHub seems to be our modern-day version of those Soviet secret gardens: for-profit publishing only “works” because people find ways to circumvent it.

In a punk rock kind of way, it’s kinda cool that so many American scientists can only do their work thanks to a database maintained by a Russia-backed fugitive. But it ought to be a huge embarrassment to the US government.2

Instead, for some reason, the government insists on siding with publishers against citizens. Sixteen years ago, the US had its own Elbakyan. His name was Aaron Swartz. He downloaded millions of paywalled journal articles using a connection at MIT, possibly intending to share them publicly. Government agents arrested him, charged him with wire fraud, and intended to fine him $1 million and imprison him for 35 years. Instead, he killed himself. He was 26.

THE FOREST FIRE IS OVERDUE

Scientists have tried to take on the middlemen themselves. They’ve founded open-access journals. They’ve published preprints. They’ve tried alternative ways of evaluating research. A few high-profile professors have publicly and dramatically sworn off all “luxury” outlets, and less-famous folks have followed suit: in 2012, over 10,000 researchers signed a pledge not to publish in any journals owned by Elsevier.

None of this has worked. The biggest for-profit publishers continue making more money year after year. “Diamond” open access journals—that is, publications that don’t charge authors or readers—only account for ~10% of all articles.3 Four years after that massive pledge, 38% of signers had broken their promise and published in an Elsevier journal.4

These efforts have fizzled because this isn’t a problem that can be solved by any individual, or even many individuals. Academia is so cutthroat that anyone who righteously gives up an advantage will be outcompeted by someone who has fewer scruples. What we have here is a collective action problem.

Fortunately, we have an organization that exists for the express purpose of solving collective action problems. It’s called the government. And as luck would have it, they’re also the one paying most of the bills!

So the solution here is straightforward: every government grant should stipulate that the research it supports can’t be published in a for-profit journal. That’s it! If the public paid for it, it shouldn’t be paywalled.

The Biden administration tried to do this, but they did it in a stupid way. They mandated that NIH-funded research papers have to be “open access”, which sounds like a solution, but it’s actually a psyop. By replacing subscription fees with “article processing charges”, publishers can simply make authors pay for writing instead of making readers pay for reading. The companies can keep skimming money off the system, and best of all, they get to call the result “open access”.

These fees can be wild. When my PhD advisor and I published one of our papers together, the journal charged us an “open access” fee of $12,000. This arrangement is a tiny bit better than the alternative, because at least everybody can read our paper now, including people who aren’t affiliated with a university. But those fees still have to come from somewhere, and whether you charge writers or readers, you’re ultimately charging the same account—namely, the US government.5

The Trump administration somehow found a way to make a stupid policy even stupider. They sped up the timeline while also firing a bunch of NIH staffers—exactly the people who would make sure that government-sponsored publications are, in fact, publicly accessible. And you need someone to check on that, because researchers are notoriously bad about this kind of stuff. They’re already required to upload the results of clinical trials to a public database, but more than half the time they just...don’t.

To do this right, you cannot allow the rent-seekers to rebrand. You have to cut them out entirely. I don’t think this will fix everything that’s wrong with science; it will merely fix the wrongest thing. Nonprofit journals still charge fees, but at least the money goes to organizations that ostensibly care about science, rather than going to CEOs who make $17 million a year. And almost every journal, for-profit or not, uses the same failed system of peer review. The biggest benefit of shaking things up, then, would be allowing different approaches to have a chance at life, the same way an occasional forest fire clears away the dead wood, opens up the pinecones, and gives seedlings a shot at the sunlight.

Science philanthropies should adopt the same policy, and some of them already have. The Navigation Fund, which oversees billions of dollars in scientific funding, no longer bankrolls journal publications at all. Its director reports that the experiment has been a great success:

Our researchers began designing experiments differently from the start. They became more creative and collaborative. The goal shifted from telling polished stories to uncovering useful truths. All results had value, such as failed attempts, abandoned inquiries, or untested ideas, which we frequently release through Arcadia’s Icebox. The bar for utility went up, as proxies like impact factors disappeared.

Sounds good to me!

CATCH THE TIGER

Fifteen years ago, the open science movement was all about abolishing for-profit journals—that’s what open science meant. It seemed like every speech would end with “ELSEVIER DELENDA EST”.

Now people barely bring it up at all.6 It’s like a tiger has escaped the zoo and it’s gulping down schoolchildren, but when people suggest zoo improvements, all the agenda items are like, “We should add another Dippin’ Dots kiosk”. If you bring up the loose tiger, everyone gets annoyed at you, like “Of course, no one likes the tiger”.

I think two things happened. First, we got cynical about cyberspace. In the 1990s and 2000s, we really thought the internet would solve most of our problems. When those problems persisted despite all of us getting broadband, we shifted to thinking that the internet was, in fact, causing the problems. And so it became cringe to think the internet could ever be a force for good. In 1995, for-profit publishers were going to be “the internet’s first victim”; in 2015, they were “the business the internet could not kill”.

Second, when the replication crisis hit in the early 2010s, the open science movement got a new villain—namely, naughty researchers. The fakers, the fraudsters, the over-claimers: those are the real bad boys of science. It’s no longer cool to hate international publishing conglomerates. Now it’s cool to hate your colleagues.

Both of these shifts were a shame. The internet utopians were right that the web would eliminate the need for journals, but they were wrong to think that would be enough. The replication police were right to call out scientific malfeasance, but they were wrong to forget our old foes. The for-profit publishers are just as bad as they ever were, and while the internet has made them more vulnerable than ever, now we know they won’t go unless they’re pushed.

If we want better science, we should catch the tiger. Not only because it’s bad for the tiger to be loose, but because it’s bad for us to look the other way. If you allow an outrageous scam to go unchecked, if you participate in it, normalize it—then what won’t you do? Why not also goose your stats a bit? Why not publish some junk research? Look around: no one cares!

There are so many problems with our current way of doing things, and most of those problems are complicated and difficult to solve. This one isn’t. Let’s heave this succubus off our scientific system and end this scam once and for all. After that, Dippin’ Dots all around.

1. James C. Scott, Seeing Like a State, pp. 203-204, 310.

2. For anyone who is all-in on “America First”: may I also mention that three of the largest publishers (Springer Nature, Elsevier, and Taylor and Francis) are all European-owned. A curious choice of companies to subsidize!

3. Don’t get me started on this “diamond open access” designation. If it costs money to publish or to read, it’s not open access, period. “Oh, you’d like your car to come with a steering wheel and brakes? You’ll need our ‘diamond’ package.”

4. I assume this number is much higher now. At the time, Elsevier controlled 16% of the market, so most people could continue publishing in their usual journals without breaking their pledge. I started graduate school in 2016, and I never heard anyone mention avoiding Elsevier journals at all.

5. The NIH has announced vague plans to cap these charges, which is kind of like saying, “I’ll let you scam me, but just don’t go crazy about it.”

6. For example, the current strategic plan of the Center for Open Science doesn’t mention for-profit journals at all.